As our world embraces digital transformation, innovative technologies bring greater opportunities, cost efficiencies, the ability to scale globally, and entirely new service capabilities that enrich lives around the world. But there is a catch: for every opportunity, there is a risk. The more dependent on and entrenched in technology we become, the more it can be leveraged against our interests. With greater scale and autonomy, we introduce new risks to family health, personal privacy, economic livelihood, political independence, and even the safety of people throughout the world.

As a cybersecurity strategist, part of my role is to understand how emerging technology will be used or misused in the future to the detriment of society. The great benefits of the ongoing technology revolution are far easier to imagine than the potential hazards. The predictive exercise requires looking ahead to see where the paths of future attackers and technology innovation intersect. To that end, here is my 2019 list of the most dangerous technology trends we will face in the coming months and years.

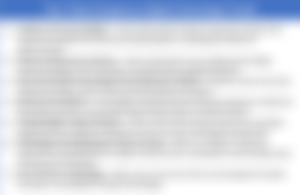

Top 7 Most Dangerous Digital Technology Trends

1. AI Ethics and Accountability

Artificial Intelligence (AI) is a powerful tool that will transform digital technology due to its ability to process data in new ways and at incredible speeds. This results in higher efficiencies, greater scale, and new opportunities as information is derived from vast unstructured data lakes. But like any tool, it can be used for good or wielded in malicious ways. The greater the power, the more significant the impact.

Weak ethics may not seem worthy of a place on this list, but when combined with the massive adoption, growing capability, and diversity of AI use cases, the results could be catastrophic. AI will be everywhere. Systems designed or employed without sufficient ethical standards can do tremendous harm, intentionally or unintentionally, at both an individual and a global scale.

Take, for example, how an entire community or nation could be manipulated by AI systems generating fake news to coerce action, shift attitudes, or foster specific beliefs. We have seen such tactics used on a limited scale to boost the reputations of shady businesses selling products, undermine governments, and lure victims into financial scams. By manipulating social media, advertising, and the news, it is possible to influence voters in foreign elections and to artificially drive the popularity of social initiatives, personalities, and smear campaigns. Now imagine highly capable systems conducting such activities at massive scale, personalized to individuals, relentless in pursuit of their goals, and rapidly improving themselves over time with no consideration of the harm they cause. AI can not only inundate people with messages, marketing, and propaganda; it can also tailor that content to each individual, based on their profile data, for maximum effect.

AI systems can also contribute to, and inadvertently promote, inequality and inequity. We are still in the early stages, where poor designs are commonplace in many AI deployments. It is not intentional, but a lack of ethical checks and balances results in unintended consequences. Credit systems that inadvertently favored certain races, people living in affluent areas, or those who support specific government policies have already been discovered. Can you imagine being denied a home or education loan because you happen to live on the wrong side of an imaginary boundary, because of your purchasing choices, or because of your ethnicity? What about joining a video conference where the intelligent system does not acknowledge you as a participant because it was never trained to recognize people with your skin color? Problems like these are already emerging.

AI systems are great at recognizing optimal paths, patterns, and objects by assigning weighted values. Without ethical standards as part of design and testing, AI systems can become rife with biases that unfairly undermine the value of certain people, cultures, opinions, and rights. The problem can propagate across the many systems and services that leverage AI and reach people through every layer of digital services in their lives, thereby limiting the opportunities they can access.

A more obvious area where AI will greatly contribute to undermining trust and security is synthetic digital impersonation, such as ‘deepfakes’ and other forms of forgery. These include videos, voices, and even writing styles that AI systems can mimic, making audiences believe they are interacting or conversing with someone else. We have seen a number of these emerge, with political leaders convincingly saying things they never said and celebrities’ likenesses superimposed onto sexually graphic videos. Some are humorous; others are intended to damage credibility and reputations. All are potentially damaging if allowed to be created and used without ethical and legal boundaries.

Criminals are interested in using such technology for fraud. If cybercriminals can leverage it at scale, in real time, and unimpeded, they will spawn a new market for victimizing people and businesses. This opens the door to creating eerily convincing forged identities that will greatly undermine modern security controls.

Scams are often spread through emails, texts, phone calls, and web advertising, but they have a limited rate of success. To compensate, criminals flood potential victims with large numbers of solicitations, anticipating that only a small fraction will succeed. When someone trusted can be impersonated, the success rate climbs significantly. Business Email Compromise (BEC), which usually impersonates a senior executive to deceive a subordinate, is growing, and the FBI estimates it has caused over $26 billion in losses to American companies in the past 3 years. This is usually done via email, but attackers have recently begun using AI technology to mimic voices in their attempts to commit fraud. The next logical step is to weave in video impersonation where possible for even greater effect.

If you thought phishing and robocalls were bad, stand by. This will be an order of magnitude worse. Victims may receive a call, video chat, email, or text from someone they know, like a coworker, boss, customer, family member, or friend. After a short chat, the impersonator asks for something: open a file, click on a link, watch a funny video, provide access to an account, fund a contract, etc. That is all it will take for criminals to fleece even security-savvy targets. Anybody could fall victim! It will be a transformational moment in the undermining of trust across the digital ecosystem and cybersecurity when anyone can use a smartphone to impersonate someone else in a real-time video conversation.

Creating highly complex AI tools that will deeply impact people's lives is a serious undertaking. It must be done with ethical guardrails that align with laws, accepted practices, and social norms. Otherwise, we risk opening the door to unfair practices and victimization. In our rush to innovate and deploy, it is easy to overlook or deprioritize ethics. The first generations of AI systems were rife with bias because designers focused on narrow business goals rather than on the ramifications for outliers or the unintended consequences of training data that did not represent a true cross-section of users. Our general inability to foresee future issues will amplify these problems.

AI is a powerful enabler and will amplify many of the remaining 6 most dangerous technology trends.

2. Insecure Autonomous Systems

As digital technology increases in capability, we are tantalizingly close to deploying widespread autonomous systems. Everyone marvels at the thought of owning a self-driving automobile or a pet robot dog that can guard the house and still play with the kids. In fact, such automation goes far beyond consumer products. It could revolutionize the transportation and logistics industry with self-operating trucks, trains, planes, and ships. All facets of critical infrastructure, like electricity and water, could be optimized for efficiency, service delivery, and reduced costs. The industrial and manufacturing sectors crave intelligent automation to reduce expenses, improve quality consistency, and increase production rates. The defense industry has long sought autonomous systems to sail the seas, dominate the air, and serve as warriors on the ground.

The risk with all these powerful, independently operating systems is that, if compromised, they can be manipulated, destroyed, held hostage, or redirected to cause great harm to economies and people. The worst-case scenarios are those where such systems are hijacked and turned against their owners, allies, and innocent citizens. Imagine terrorists taking control of vehicle fleets to cause mass fatalities in spectacular fashion, criminals shutting off regional power or water systems and demanding a ransom, or attackers manipulating industrial sites that handle caustic chemicals or dangerous equipment, creating a hazard to nearby communities or an ecological disaster.

Autonomy is great when it works as intended. However, when manipulated maliciously it can cause unimaginable harm, disruption, and loss.

3. Use of Connected Technology to the Detriment of Others

One of the greatest aspects of digital technology is how it has increasingly connected the world. The Internet is a perfect example. We now have more access to each other, to information, and to services than ever before. Data is the backbone of the digital revolution.

However, connectivity is a two-way path. It also allows unwanted parties to connect with, harass, manipulate, and watch people. The impacts and potential scale are only now being understood. With billions of cameras being installed around the world, cell phones serving as perfect surveillance devices, and systems recording every keystroke, purchase, and movement people make, the risks of harm are compounded.

Social media is a great example of how growth and connectivity have transformed daily life, but it has also been used to victimize people in alarming ways. Bullying, harassment, stalking, and subjugation are commonplace. Searching for information on others has never been easier, and unflattering details are rarely forgotten on the Internet.

The world of technology is turning into a privacy nightmare. Almost every device, application, and digital service collects data about its users. So much is collected that companies cannot currently make sense of most of the unstructured data they amass, and they typically store what they gather in massive ‘data lakes’ for future analysis. Current estimates are that 90% of all data being collected cannot be used in its current unstructured form. This data is rarely deleted; it is kept for future analysis and data mining. With the advance of AI systems, valuable intelligence can be readily extracted and correlated for use in profiling, marketing, and various forms of manipulation and gain.

All modern connected systems can be manipulated, corrupted, disrupted, and harvested for their data, regardless of whether they are consumer, commercial, or industrial grade. Whether the technology is a device, a component, or a digital service, hackers have proven they can rise to the challenge and find ways to compromise it, misuse it, or impact its availability.

These very same systems can make terror attacks more effective and become direct weapons of warfare, as we have seen with drones. Terror groups and violent extremists are looking to leverage such technology in pursuit of their goals. In many cases, they take existing technology and repurpose it. As a result, asymmetric warfare is increasing across the globe, because connected technology is an economical force multiplier.

The international defense industry is also keen on connected technology that enables greater weapon effectiveness. Every branch of the U.S. military is heavily investing in technologies for better communication, intelligence gathering, improved target acquisition, weapons deployment, and sustainable operations.

Digital technology can connect and enrich the lives of people across the globe, but if used against them it can suppress, coerce, victimize, and inflict harm. Understanding the benefits as well as the risks is important if we want to minimize the potential downsides.

4. Pervasive Surveillance

With the explosive increase of Internet-of-Things (IoT) devices, the growth of social media, and the rise of software that tracks user activity, the already significant depth of personal information available is expanding exponentially. This allows direct and indirect analysis to build highly accurate profiles of people, which provide insights into how to influence them. The Cambridge Analytica scandal was one example, in which a company harvested data to build individual profiles of every American and used them to create models it sold to clients seeking to persuade voting choices. Although the scandal earned significant press, those models were based on only 4,000 to 5,000 pieces of data per person. What is available about people today, and will be in the future, dwarfs those numbers, allowing for much richer and more accurate behavioral profiles. AI is now being leveraged to crunch the data and build personality models at a scale and precision never previously imagined. It is used in advertising and sales, politics, government intelligence, social issues, and other societal domains because it can identify, track, influence, cajole, threaten, victimize, and even extort people.

Governments are working on programs to capture all activity on every major social network and telecommunications network, along with sales transactions, travel records, and public camera feeds. The wholesale surveillance of people is commonplace around the world and is used to suppress free speech, identify dissidents, and persecute peaceful participants in demonstrations or public rallies. Such tactics were used during the Arab Spring uprising with dire consequences, and more recently during the Hong Kong protests. In the absence of privacy, people become afraid to speak their minds and speak out against injustice. Surveillance will continue to be used by oppressive governments and businesses to suppress competitors and people who hold unfavorable opinions or are seen as threats.

Cybercriminals are collating breach data and public records to sell basic profiles of people on the Dark Web to other criminals seeking to conduct attacks and commit financial and medical fraud. Almost every major financial, government, and healthcare organization has suffered a breach, exposing rich and valuable information about its customers, partners, or employees. Since 2015, the data market has surpassed the value of the illicit drug market.

The increasing collection of personal data allows for surveillance of the masses, widespread theft and fraud, manipulation of citizens, and the empowerment of foreign intelligence gathering that facilitates political meddling, economic espionage, and social suppression. Widespread surveillance undermines the basic societal right to have privacy and the long-term benefits that come with it.

5. The Next Billion Cyber Criminals

Every year, more people join the Internet and become part of a global community that can reach every other connected person. That is one of the greatest achievements of modern business, and an important personal moment for anyone who instantly becomes part of the global digital society. But there are risks, both to the newcomers and to current users.

There are currently about 4.4 billion Internet users, and that number is expected to reach 6 billion by 2022. In the next several years, another billion people will join. Most current users reside in the top industrial nations, so the majority of new Internet members will come from economically struggling countries. It is important to know that most of the world earns less than $20 a day. Half of the world earns less than $10 a day, and over 10% live on less than $2 a day. These people struggle to put food on the table and provide the basics for their families. Often living in developing regions, they also contend with high unemployment and unstable economies. They hustle every day, in any way they can, for self-preservation and survival.

For them, joining the Internet is not about convenience or entertainment; it is an opportunity for a better life. The Internet can be a means to make money beyond the limitations of their local economy. Sadly, many of the ways available to them are illegal. The problem is socioeconomic, behavioral, and technical in nature.

Unfortunately, cybercrime is very appealing to this audience, as it may be the only opportunity to make money for these desperate new Internet citizens. Organized criminals recognize this growing pool of cheap labor willing to take risks, and they make it very easy for these newcomers to join their nefarious activities.

There are many schemes and scams that desperate people may turn to: ransomware, money mules, scam artistry, bot herding, crypto-mining malware distribution, telemarketing fraud, spam generation, CAPTCHA and other authentication-bypass jobs, and the list goes on. All are enabled simply by connecting to the Internet.

Ransomware-as-a-service (RaaS) jobs are by far the biggest threat to innocent people and legitimate businesses. Ransomware is estimated to triple in 2019, causing over $11 billion in damages. In RaaS, the participant simply contacts potential victims around the world and tries to convince them to open a file or click a link that infects their system with malware. If a victim pays to get their files and access restored, the referrer gets a percentage of the extortion.

There are no upfront costs or special technical skills needed. Organized criminals do all the back-end work: they create the malware, maintain the infrastructure, and collect the extorted money. But they need people to do the legwork of ‘selling’ or ‘referring’ victims into the scam. It is not ethical, but it can be a massive payday for people who earn only a few dollars a day. The risk of being caught is negligible, and in relative terms the income may enable them to feed their family, put their children in school, or pay for life-saving medicine. For most people in those circumstances, the risks are hardly worth considering against the potential for life-changing income.

Many people are not evil by nature and want to do good, but without options, survival becomes the priority. One of the biggest problems is the lack of choices for legitimately earning money.

A growing percentage of the next billion people to join the Internet will take a darker path and support cybercrime. That is a lot of new attackers that will be highly motivated and creative to victimize others on the Internet, putting the entire community collectively at risk. In the next few years, the cybercrime problem for everyone is going to get much worse.

6. Technology Inter-Dependence House of Cards

Innovation is happening so fast that it is building upon other technologies that are not yet fully vetted and mature. This can lead to unintended consequences when layer upon layer of strong dependencies is built on weak foundations. When a failure occurs in a critical spot, a catastrophic cascading collapse can follow. The result is significantly larger impacts and longer recovery times for cybersecurity incidents.

Application developers are naturally drawn to efficient processes. Code is often reused across projects, repositories are shared, and third-party dependencies are a normal part of modern application programming. Such building blocks are often shared with the public for anyone to use. It makes perfect sense, as it reduces original code development and testing and increases the speed at which products can be delivered to customers. But there is a downside. When a vulnerability exists in widely used code, it can be distributed across hundreds or thousands of different applications, thereby impacting their collective user base. Many developers don't check for weaknesses during development or after release. Even fewer push updates for third-party code once it is in the hands of customers.
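To make this propagation concrete, here is a minimal Python sketch, assuming a small and entirely hypothetical dependency graph (every package name below is made up). It walks the graph in reverse, from one vulnerable package up to everything that depends on it directly or transitively, showing how a single flaw in shared code reaches many applications at once.

```python
from collections import deque

# Hypothetical dependency graph: each package maps to the packages it depends on.
# All names are invented for illustration only.
DEPENDS_ON = {
    "web-app": ["http-client", "template-engine"],
    "mobile-backend": ["http-client", "json-parser"],
    "billing-service": ["json-parser"],
    "http-client": ["tls-lib", "json-parser"],
    "template-engine": [],
    "json-parser": [],
    "tls-lib": [],
}


def affected_by(vulnerable, graph):
    """Return every package that depends on `vulnerable`, directly or transitively."""
    # Invert the graph so we can walk from a dependency up to its dependents.
    dependents = {pkg: [] for pkg in graph}
    for pkg, deps in graph.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(pkg)

    # Breadth-first search over the inverted edges.
    seen = set()
    queue = deque([vulnerable])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(affected_by("json-parser", DEPENDS_ON)))
# prints ['billing-service', 'http-client', 'mobile-backend', 'web-app']
```

A flaw in the one small parser reaches every application in this toy graph, including `web-app`, which never uses it directly. Real ecosystems are vastly larger and also involve version ranges and advisory data, which dedicated software composition analysis tools handle; this sketch only illustrates the reachability idea.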

The same goes for architectures and programs that enhance or build upon other programs or devices. Think of the number of apps on smartphones or personal computers, applets on home Internet media devices, or extensions running within web browsers. The cloud is another area where many technologies operate in close proximity; the entire world of virtual machines and software containers relies on common infrastructure. We must also consider how insecure the supply chain might be for complex devices like servers, supercomputers, defense systems, business services, voting machines, healthcare devices, and all other critical infrastructure. Consider that entire ‘smart cities’ are already in the planning and initial phases of deployment.

The problem extends well past hardware and software. People represent a significant weakness in the digital ecosystem. A couple of years ago, I pulled together top industry thought leaders to discuss what the future would look like in a decade. One of the predictions was a concerning trend toward unhealthy reliance on technology. So much so that we humans may stop passing on how to do basic things, and over time the skills needed to fix complex technology would distill down to a very small set of people. Increased dependence combined with less support capability leads to lengthier recovery efforts.

Imagine a world where Level 5 autonomous cars have been transporting everyone for an entire generation. What happens if the system experiences a catastrophic failure? Who would know how to drive? Would there even be manual controls installed in vehicles? How would techs get to where they needed to be to fix the system? Problems like this are rarely factored into products or the architecture of interconnected systems.

All this seems a bit silly and far-fetched, but we have seen it before on a lesser scale. Those of you who remember the Y2K (Year 2000) problem, also called the Millennium Bug, will recall that, due to coding limitations, the old software running many major computer systems had to be modified to accept dates from the year 2000 onward. The fix was not terrible, but many of those systems were written in the outdated COBOL programming language, and there simply weren’t many people left who knew it. The human skillset had dwindled to a very small number, which caused tremendous anxiety and a flurry of effort to avoid catastrophe.

We are now 20 years past that problem, and the pace of technology innovation has only increased. Similar risks continue to rise as rapid advancement builds on recently established technologies to reach ever higher. When such hastily built structures come crumbling down, it will be in spectacular fashion. Recovery times and overall impacts will be much greater than what we have seen in the past with simple failures.

7. Loss of Trust in Technology

Digital technology provides tremendous opportunities for mankind, but we must never forget that people are always in the loop. They may be the user, customer, developer, administrator, supplier, or vendor, but they are part of the equation. When technology is used to victimize those people, especially when it could have been prevented, there is an inevitable loss of trust.

As cyber-attacks worsen, Fear, Uncertainty, and Doubt (FUD) begin to supplant trust. Such anxiety toward innovation stifles adoption, impacts investment, and ultimately slows the growth of technology. FUD is the long-term enemy of managing risk. To properly seek the optimal balance between security, costs, and usability, there must be trust.

When trust falls past the tipping point while dependency remains, it creates a recipe in which governments are pressured to react quickly and accelerate restrictive regulations that burden the process of delivering products to market. This compounds the slowdown in consumer spending, which drives developers to seek new domains in which to apply their trade. Innovation and adoption slow, affecting both upstream and downstream areas of other supporting technologies. The ripple effects grow larger, and the digital society misses out on great opportunities.

Our world would be far different if initial fears about automobiles, modern medicine, electricity, vaccines, flight, space travel, and general education had stifled the technological advances that pushed mankind forward to where we are today. The same could hold true for tomorrow’s technology if rampant and uninformed fears take hold.

Companies can make a difference. Much of the burden is, in fact, on the developers and operators of technology to do what is right and make their offerings secure, safe, and respectful of users’ rights, such as privacy and equality. If companies choose to release products that are vulnerable to attack, weak to recover, or contribute to unsafe or biased outcomes, they should be held accountable.

We see fears run rampant today with emerging technologies such as Artificial Intelligence, blockchain, quantum computing, and cryptocurrencies. Some concerns are justifiable, but much of the distress is unwarranted and propagated by those with personal agendas. Clarity is needed. Without intelligent and open discussion, uncertainty spreads unchecked.

As an example, governments have recently expressed significant concerns about the rise of cryptocurrencies, because they pose a risk to governments' ability to control monetary policy, such as managing the amount of money in circulation, and because they can contribute to crime. There have been frantic calls by legislators, who openly admit to not understanding the technology, to outlaw or greatly restrict decentralized digital currencies. The same distrust and perception of losing control existed back when electricity and automobiles were introduced. The benefits then and now are significant, yet it is uncertainty that drives fear.

Fear of the unknown can be very strong among those who are uninformed. In the United States, people and communities have had the ability to barter and create their own local currencies since the birth of the nation. It is true that cryptocurrency is used by criminals, but the latest statistics show that cash is still king for largely untraceable purchases of illicit goods, as the desired reward of massive financial fraud, and as a tax evasion tool. Cryptocurrency has the potential to deliver the benefits of fiat currency more economically than cash, while instituting controls that help suppress criminal use.

Unfounded fears represent a serious risk, and governments and legislators are increasingly inclined to ban technology before understanding what balance can be struck between its opportunities and risks. Adoption of new technologies will always bring elevated dangers, but it is important that we take a pragmatic approach to understand them and choose a path forward that makes society more empowered, stronger, and better prepared for the future.

As we collectively continue our rapid expedition through the technology revolution, we all benefit from the tremendous opportunities that innovation brings to our lives. Even in our bliss, we must not ignore the risks that accompany dazzling new devices, software, and services. The world has many challenges ahead, and cybersecurity will play an increasing role in addressing cyber-attack risks as well as privacy and safety concerns. With digital transformation, the stakes grow over time. Understanding and managing unintended consequences is important to maintaining continued trust in, and adoption of, new technologies.

- Matthew Rosenquist is a Cybersecurity Strategist and Industry Advisor

Originally published in HelpNetSecurity 12/10/2019

Technology provides tools, and all tools are double-edged, even at a primitive level. The invention of the knife, or the axe, revolutionised human work at that stage, but both are also tools often used to threaten or kill people. Cybertechnology is no different in that respect; it, too, is double-edged, and we must expect it to be. We are talking about tools, and tools can always be used as well as abused.

I like your article because you really try to identify the dangers and disadvantages on a broad front, and they need to get more attention.